The rise and fall of 3Dfx

The Christmas holidays usually mean taking a break from the everyday routine and focusing more on the important relationships in our lives, like family and close friends. To me, this also somehow includes having the time to think about technology-related things that either are new or haven’t crossed my mind in a long time.

During this past Christmas season, I strangely found myself thinking about a Christmas of the past, more precisely December of 1997. Why is this interesting? Because that was the year when I bought my first 3D Accelerator graphics card.

A little bit of history

Videogames have not always looked like they do today. Certainly they didn’t go straight from Pac-Man to Fallout 3 either.

There was a time in the early ’90s when game developers discovered that they could represent entire worlds using computer graphics and three-dimensional shapes. They also discovered that it was possible to cover those shapes with images instead of just applying some colors, to make them look more realistic. There was also a growing interest in reproducing physical aspects of the real world in 3D graphics, like the effects of lights and shadows, water, fog, reflections etc.

Unfortunately, the processing power of the CPUs available on the market at that time wasn’t quite up to the challenge. In spite of the advanced algorithms that were developed to render 3D graphics in software, the end results were always far from what we would call “realistic”.

The rise

Up until 1996, when a company called 3Dfx Interactive based in San Jose,

California, published the first piece of computer hardware exclusively dedicated

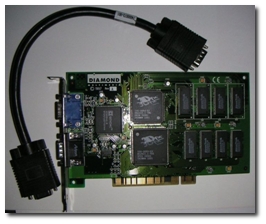

to rendering 3D graphics on a PC screen: a 3D Accelerator. Their product was

called Voodoo Graphics,  and consisted of a PCI card equipped with a 3D graphics processing unit (today known as GPU) running at 50 MHz and 4 MB of DRAM memory.

and consisted of a PCI card equipped with a 3D graphics processing unit (today known as GPU) running at 50 MHz and 4 MB of DRAM memory.

The company also provided a dedicated API called Glide, which developers could use to interact with the card and exploit its capabilities. Glide was originally created as a subset of the industry-standard 3D graphics library OpenGL, specifically focused on the functionality required for game development. Another key difference between Glide and other 3D graphics APIs was that the functions exposed in Glide were implemented directly with native processor instructions for the GPU on the Voodoo Graphics. In other words, while OpenGL, and later on Microsoft’s Direct3D, provided an abstraction layer that exposed a common set of APIs independent of the specific graphics hardware that would actually process the instructions, Glide exposed only the functionality supported by the Voodoo’s GPU.

This approach gave all 3Dfx cards superior performance in graphics processing, a key advantage that lasted many years, even when competing cards entered the market, such as the Matrox G200, ATI Rage Pro and Nvidia RIVA 128. However, it also resulted in some heavy limitations in image quality, like the maximum resolution of 640×480 (later increased to 800×600 with the second-generation cards, called Voodoo2) and support for only 16-bit color.

The 3Dfx Voodoo Graphics was designed from the ground up with the sole purpose of running 3D graphics algorithms as fast as possible. Although this may sound like a noble purpose, it meant that in practice the card was missing a regular VGA controller onboard. This resulted in the need for a separate video adapter just to render 2D graphics. The two cards had to be connected with a bridge cable (shown in the picture) going from the VGA card to the Voodoo, while another one connected the latter to the screen. The 3D Accelerator would usually pass the video signal from the VGA card through to the screen, and engaged only when an application using Glide was running on the PC.

Being the first on the consumer market with dedicated 3D graphics hardware, 3Dfx completely revolutionized the computer gaming space on the PC, setting a new standard for how 3D games could and should look. Many of the new games developed from the mid-’90s up to the year 2000 were optimized for running on Glide, allowing the lucky owners of a 3Dfx card (like me) to enjoy great and fluid 3D graphics.

To give you an idea of how 3Dfx impacted games, here is a screenshot of how a popular first-person shooter like Quake II looked when running in Glide mode compared to traditional software-based rendering.

The fall

If 3Dfx was so great, why isn’t it still around today, you might ask. I asked myself the same question.

After following up the original Voodoo Graphics card with some great successors like the Voodoo2 (1998), Voodoo Banshee (1998) and Voodoo3 (1999), 3Dfx got overshadowed by two powerful competitors: Nvidia’s GeForce and ATI’s Radeon. A series of bad strategic decisions that led to delayed and overpriced products caused 3Dfx to lose market share, ultimately bringing the company to the brink of bankruptcy in late 2000. Apparently 3Dfx, by refusing to incorporate combined 2D/3D graphics chips and to support Microsoft’s DirectX, was no longer capable of producing cards that lived up to the new market standard. In December 2000 3Dfx agreed to be bought by Nvidia, which acquired much of the company’s intellectual property, employees, resources and brands.

Even if 3Dfx no longer exists as a company, it effectively placed a landmark in the history of computer games and 3D graphics, opening the way to the games we see today on store shelves. And the memory of its glorious days still warms the hearts of its fans, especially during cold Christmas evenings.

/Enrico

Migrating ASP.NET Web Services to WCF

I am currently in the middle of a project where we are migrating a (large) number of web services built on top of ASP.NET in .NET 2.0 (commonly referred to as ASMX Web Services) over to the Windows Communication Foundation (WCF) platform available in .NET 3.0.

The primary reason we want to do that is to take advantage of the binary message serialization support available in WCF to speed up communication between the services and their clients. This same technique was possible in earlier versions of the .NET Framework thanks to a technology called Remoting, which is now superseded by WCF. In both cases it requires that both the client and server run on .NET in order to work. But I digress.

Since the ASMX model has long been the primary Microsoft technology for building web services on the .NET platform, I figured they must have laid out a nice migration path to bring all those web services to the new world of WCF. It turns out they did!

The quick way

If you built your ASMX web services with code separation (that is, all programming logic resided in a separate code-behind file instead of being embedded in the ASMX file) it is possible to get an ASMX web service up and running in WCF pretty quickly by going through a few easy steps:

- Your WCF web service class no longer inherits from the `System.Web.Services.WebService` class, so remove that base class.
- Change the `System.Web.Services.WebService` attribute on the web service class to the `System.ServiceModel.ServiceContract` attribute.
- Change the `System.Web.Services.WebMethod` attribute on each web service method to the `System.ServiceModel.OperationContract` attribute.
- Substitute the .ASMX file with a new .SVC file with the following header:

```
<%@ ServiceHost Service="MyNamespace.MyService" %>
```

- Modify the application configuration file to create a WCF endpoint that clients will use to send their requests to:

```xml
<system.serviceModel>
  <behaviors>
    <serviceBehaviors>
      <behavior name="MetadataEnabled">
        <serviceMetadata httpGetEnabled="true" />
        <serviceDebug includeExceptionDetailInFaults="true" />
      </behavior>
    </serviceBehaviors>
  </behaviors>
  <services>
    <service name="MyNamespace.MyService"
             behaviorConfiguration="MetadataEnabled">
      <endpoint name="HttpEndpoint"
                address=""
                binding="wsHttpBinding"
                contract="MyNamespace.IMyService" />
      <endpoint name="HttpMetadata"
                address="contract"
                binding="mexHttpBinding"
                contract="IMetadataExchange" />
      <host>
        <baseAddresses>
          <add baseAddress="http://localhost/myservice" />
        </baseAddresses>
      </host>
    </service>
  </services>
</system.serviceModel>
```

- Decorate all classes that are exchanged by the web service methods as parameters or return values with the `System.Runtime.Serialization.DataContract` attribute to allow them to be serialized on the wire.
- Decorate each field of the data classes with the `System.Runtime.Serialization.DataMember` attribute to include it in the serialized message.

Here is a summary of the changes you’ll have to make to your ASMX web service:

| Where the change applies | ASMX | WCF |
|---|---|---|
| Web service class inheritance | WebService | - |
| Web service class attribute | WebServiceAttribute | ServiceContractAttribute |
| Web service method attribute | WebMethodAttribute | OperationContractAttribute |
| Data class attribute | XmlRootAttribute | DataContractAttribute |
| Data class field attribute | XmlElementAttribute | DataMemberAttribute |
| HTTP endpoint resource | .ASMX | .SVC |
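To make the changes summarized above a bit more concrete, here is a minimal sketch of what a migrated service might look like. The `Customer` type and the `GetCustomer` operation are hypothetical names made up for illustration, and putting the contract on an `IMyService` interface is an assumption chosen to match the `contract="MyNamespace.IMyService"` setting in the configuration shown earlier. The comments point out what each attribute replaces on the ASMX side.

```csharp
using System.Runtime.Serialization;
using System.ServiceModel;

namespace MyNamespace
{
    // Hypothetical data class exchanged by the service.
    // ASMX equivalent: [XmlRoot] on the class, [XmlElement] on the fields.
    [DataContract]
    public class Customer
    {
        [DataMember]
        public int Id;

        [DataMember]
        public string Name;
    }

    // ASMX equivalent: [WebService] on the service class.
    [ServiceContract]
    public interface IMyService
    {
        // ASMX equivalent: [WebMethod] on the method.
        [OperationContract]
        Customer GetCustomer(int id);
    }

    // Note: no System.Web.Services.WebService base class anymore.
    public class MyService : IMyService
    {
        public Customer GetCustomer(int id)
        {
            // Placeholder logic; a real service would look the customer up.
            Customer customer = new Customer();
            customer.Id = id;
            customer.Name = "Sample customer";
            return customer;
        }
    }
}
```

The service class itself stays a plain .NET class, so the existing programming logic can usually be moved over unchanged.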

As a side note, if you are using .NET 3.5 SP1 the porting process gets a little easier, since WCF will automatically serialize any object that is part of a service interface without the need for any special metadata attached to it. This means you no longer have to decorate the classes and members exposed by a WCF service contract with the DataContract and DataMember attributes.

Important considerations

The simple process I just described works well for relatively simple web services, but in any real-world scenario you will have to take a number of aspects into consideration:

- WCF services are not bound to the HTTP protocol as ASMX web services are. This means you can host them in any process you like, whether it be a Windows Service or a console application. In other words using Microsoft IIS is no longer a requirement, although it is a valid possibility in many situations.

- Even if WCF services are executed by the ASP.NET worker process when hosted in IIS, they do not participate in the ASP.NET HTTP Pipeline. This means that in your WCF services you no longer have access to the ASP.NET infrastructure services, such as:

  - `HttpContext`
  - `HttpSessionState`
  - `HttpApplicationState`
  - ASP.NET Authorization
  - Impersonation
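As an illustration of the first point above, here is a rough sketch of a WCF service hosted in a plain console application, without IIS. The `Ping` operation, the port number and the address are assumptions picked for the example. It uses `NetTcpBinding`, which is not tied to HTTP and encodes messages in binary form.

```csharp
using System;
using System.ServiceModel;

namespace MyNamespace
{
    [ServiceContract]
    public interface IMyService
    {
        // Hypothetical operation, only here to have something to call.
        [OperationContract]
        string Ping();
    }

    public class MyService : IMyService
    {
        public string Ping()
        {
            return "pong";
        }
    }

    public static class Program
    {
        public static void Main()
        {
            // Assumed address; any free TCP port would do.
            Uri baseAddress = new Uri("net.tcp://localhost:9000/myservice");

            using (ServiceHost host = new ServiceHost(typeof(MyService), baseAddress))
            {
                // Expose the contract over TCP at the base address.
                host.AddServiceEndpoint(typeof(IMyService), new NetTcpBinding(), "");
                host.Open();

                Console.WriteLine("Service listening at {0}. Press Enter to stop.", baseAddress);
                Console.ReadLine();
            }
        }
    }
}
```

The same `ServiceHost` code could run inside a Windows Service; only the process that calls `Open` changes.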

So what if your ASMX web services are making extensive use of the ASP.NET session store or employ the ASP.NET security model? Is it a show-stopper? Luckily enough, no. There is a solution to keep all that ASP.NET goodness working in WCF. It is called ASP.NET Compatibility Mode.

Backwards compatibility

Running WCF services with the ASP.NET Compatibility Mode enabled will integrate them in the ASP.NET HTTP Pipeline, which of course means all ASP.NET infrastructure will be back in place and available from WCF at runtime. You can enable this mighty mode from WCF by following these steps:

- Decorate your web service class with the `System.ServiceModel.Activation.AspNetCompatibilityRequirements` attribute as follows:

```csharp
[AspNetCompatibilityRequirements(
    RequirementsMode = AspNetCompatibilityRequirementsMode.Required)]
public class MyService : IMyService
{
    // Service implementation
}
```

- Add the following setting to the application configuration file:

```xml
<system.serviceModel>
  <serviceHostingEnvironment aspNetCompatibilityEnabled="true" />
</system.serviceModel>
```

Remember that this will effectively tie the WCF runtime to the HTTP protocol, just like with ASMX web services, which means you can no longer add any non-HTTP endpoints to your WCF services.
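With compatibility mode switched on, service code can reach the ASP.NET infrastructure again. The following is a small sketch, assuming the service is hosted in IIS with the settings above; the `IHitCounter` contract and the `"hits"` key are hypothetical names used only for this example. It keeps a counter in the ASP.NET application state.

```csharp
using System.ServiceModel;
using System.ServiceModel.Activation;
using System.Web;

namespace MyNamespace
{
    [ServiceContract]
    public interface IHitCounter
    {
        [OperationContract]
        int RegisterHit();
    }

    [AspNetCompatibilityRequirements(
        RequirementsMode = AspNetCompatibilityRequirementsMode.Required)]
    public class HitCounter : IHitCounter
    {
        public int RegisterHit()
        {
            // HttpContext.Current is expected to be available here only
            // because ASP.NET Compatibility Mode is enabled.
            HttpApplicationState application = HttpContext.Current.Application;

            // Application state is shared by all requests, so guard the update.
            application.Lock();
            try
            {
                object stored = application["hits"];
                int hits = (stored == null) ? 1 : (int)stored + 1;
                application["hits"] = hits;
                return hits;
            }
            finally
            {
                application.UnLock();
            }
        }
    }
}
```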

Good luck and please, let me know your feedback!

/Enrico

REST web services

If you have followed the latest advancements in the technologies and standards around web services, you must have come across the term “REST” more than once.

In rough terms REST is a way of building web services by relying exclusively on the infrastructure of the World Wide Web to define operations and exchange messages. This is an alternative to “traditional” web services, which instead use a set of standardized XML dialects, WSDL and SOAP to achieve the same goals.

The reason I’m writing about REST is that if you, like me, have prematurely judged it as some kind of toy technology, you are in for a real eye-opener!

The history

First of all, let’s be clear about the origin of REST. As a term it means Representational State Transfer; as a concept it stands completely separate from web services. It was first defined in the year 2000 by Roy Fielding, then a PhD student at the University of California, Irvine. In his doctoral dissertation, entitled Architectural Styles and the Design of Network-based Software Architectures, Fielding sought to define the architectural principles that make up the infrastructure of the most successful large-scale distributed system created by mankind: the World Wide Web. Fielding thought that by studying the Web, he would be able to identify some key design principles that would be beneficial to any kind of distributed application with similar needs of scalability and efficiency. He referred to the result of his research as “REST”.

So, what is REST?

REST is an architectural style for building distributed systems.

The key design principle of a REST-based architecture is that its components use a uniform interface to exchange data.

Uniform interface means that all components expose the same set of operations to interact with one another. In this interface data is referred to as resources, and the interface itself is built around three main concepts:

- A naming scheme for globally identifying resources

- A set of operations to manipulate resources

- A format to represent resources

The idea is that generalizing and standardizing the components’ interface reduces the overall system complexity by making it easier to identify the interactions among different parts of the system. As of today, the Web is the only system that fully embraces the principle of a uniform interface, and it does so in the following way:

- URI is used as a naming scheme for globally identifying resources

- HTTP verbs define the available operations to manipulate resources

- HTML is the textual format used to represent resources

Applying REST to web services

Now, it is a known fact that the Web was created in order to provide a worldwide network for distributing static documents. However, it has been proven that the Web holds a potential that goes far beyond its original goal. Imagine if we could leverage the REST infrastructure of the Web to build web services. Wouldn’t that be cool?

First of all, let’s take a look at what web services are used for:

- Define a set of operations to manipulate resources

- Uniquely identify these operations so that they can be globally accessed by clients

- Use a common format to represent the resources exchanged between web services and their consumers

Well, the Web seems to have the entire infrastructure we need to accomplish all of those goals already in place. In fact the HTTP protocol defines a pretty rich interface to manipulate data. Moreover this interface maps surprisingly well to the kind of operations usually exposed by web services.

| HTTP verb | CRUD operation |
|---|---|
| PUT | Create |
| GET | Read |
| POST | Update |
| DELETE | Delete |

Also, since web service contracts are inherently accessed through URLs, it isn’t too much of a stretch to use URLs to access single operations in a contract.

Finally, web services encode the contents of their messages using SOAP, which is an XML dialect. But why do we need a whole new protocol to represent resources, when we could simply use XML as is instead?

What we just did was apply the design principles dictated by REST to web services. The result of this process is commonly referred to as “RESTful” web services.
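The mapping in the table above can be sketched in a few lines of code. This is only an illustration, not a full REST client: the `RestSketch` class, its method names and the `http://example.com/customers/42` URL are all made up for the example. The requests are built but never sent, so no server is needed to try it out.

```csharp
using System;
using System.Net;

public static class RestSketch
{
    // Maps a CRUD operation to an HTTP verb, following the table above.
    public static string VerbFor(string crudOperation)
    {
        switch (crudOperation)
        {
            case "Create": return "PUT";
            case "Read": return "GET";
            case "Update": return "POST";
            case "Delete": return "DELETE";
            default:
                throw new ArgumentException("Unknown CRUD operation: " + crudOperation);
        }
    }

    // Builds (but does not send) a request that addresses a resource by URL
    // and asks for a plain XML representation of it.
    public static HttpWebRequest BuildRequest(string crudOperation, string resourceUrl)
    {
        HttpWebRequest request = (HttpWebRequest)WebRequest.Create(resourceUrl);
        request.Method = VerbFor(crudOperation);
        request.Accept = "application/xml";
        return request;
    }

    public static void Main()
    {
        // Hypothetical resource URL.
        string url = "http://example.com/customers/42";

        foreach (string operation in new string[] { "Create", "Read", "Update", "Delete" })
        {
            HttpWebRequest request = BuildRequest(operation, url);
            Console.WriteLine("{0,-6} -> {1} {2}", operation, request.Method, request.RequestUri);
        }
    }
}
```

Notice that the URL stays the same for all four operations. Only the verb changes, which is exactly what the uniform interface is about.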

Wrapping up

This is how REST web services compare to the ones based on the WS-* standards:

| Goal of web services | WS-* | REST |
|---|---|---|
| Addressing resources and operations | SOAP | URL |
| Defining supported operations | WSDL | HTTP |
| Representing resources | XML | XML/JSON/Binary |

As you can see, REST web services leverage the stability, scalability and reach offered by the same technologies and standards that power the Web today. What’s most fascinating about REST is the huge potential that comes out of such a simple design.

However, don’t think even for a moment that REST will ever replace SOAP-based web services. Both architectures have their strengths and weaknesses, and REST is certainly not the right answer for all kinds of applications. I am especially thinking about corporate environments, where the needs for security and reliability are better addressed by the standards incorporated in WSDL and SOAP. As always, the solution lies in using the right tool for the job.

/Enrico