Leveraging the cloud for fun and games

Friday night, February 21, 2014. That’s when the tretton37 Counter-Strike: Global Offensive fragfest was scheduled to start. Avid gamers looking to share some virtual blood were eager to join in from our offices in Lund and Stockholm. A few more would play over the Internet.

The time and place were set. Pizzas were ordered. Everything was ready to go. Except for one thing:

We didn’t have a dedicated Counter-Strike server to host the game on.

Finding a spare machine to dedicate to that one night wasn’t an easy task, given our requirements:

- The host should be reachable from the Internet

- The machine should have enough hardware to handle a CS:GO game with 15+ players

- The machine should be able to scale up as more players join the game

- The whole thing should be a breeze to set up

For days I pondered my options when, suddenly, it hit me:

Where's the place to find commodity hardware that's available for rent, is on the Internet and can scale at will?

The cloud, of course! This realization fell on my head like the proverbial apple from the tree.

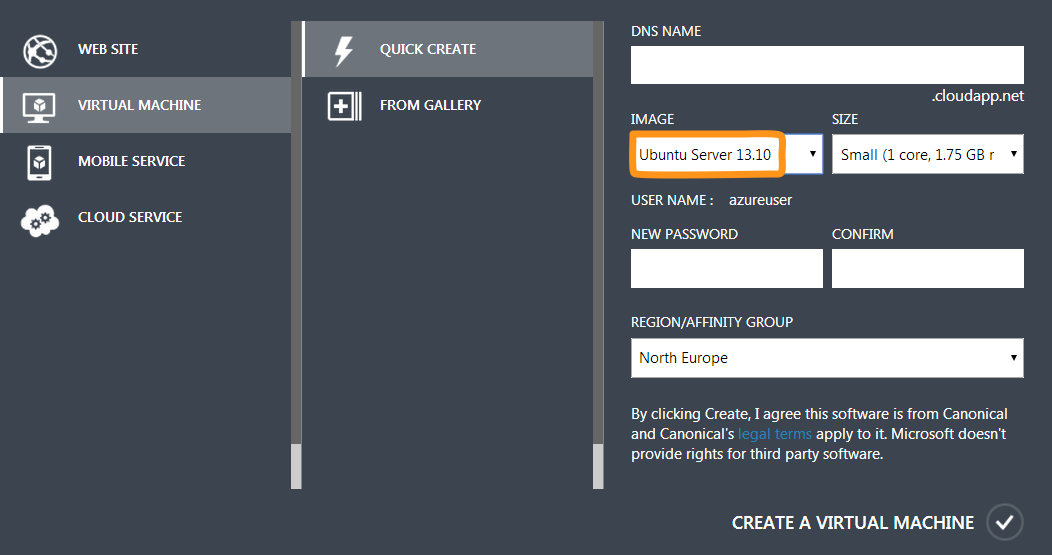

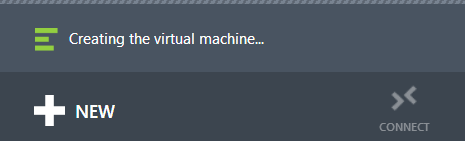

Step 1: Getting a machine in the cloud

Valve puts out its Source Dedicated Server software for both Windows and Linux. The Windows version has a GUI and is generally what you’d call “user friendly”. The Linux version, on the other hand, is lean & mean and is managed entirely from the command line. Programmers being programmers, I decided to go for the Linux version.

Now, having established that I needed a Linux box, the next question was: which of the available clouds was I going to entrust with our gaming night? Since tretton37 is mainly a Microsoft shop, it felt natural to go for Microsoft Azure. However, I wasn’t holding out much hope that they would allow me to install Linux on one of their virtual machines.

As it turned out, I had to eat my hat on that one. Azure does, in fact, offer pre-installed Linux virtual machines ready to go. To me, this is proof that the cloud division at Microsoft totally gets how things are supposed to work in the 21st century. Kudos to them.

After literally 2 minutes, I had an Ubuntu Server machine with root access via SSH running in the cloud.

If I hadn’t already eaten my hat, I would take it off for Azure.

Step 2: Installing the Steam Console Client

Hosting a CS:GO server implies setting up a so-called Source Dedicated Server, also known as SRCDS. That’s Valve’s server software used to run all their games that are based on the Source Engine. The list includes Half-Life 2, Team Fortress 2, Counter-Strike and so on.

A SRCDS is easily installed through the Steam Console Client, or SteamCMD. The easiest way to get it on Linux is to download it and unpack it from a tarball. But first things first.

It’s probably a good idea to run the Source server with a dedicated user account that doesn’t have root privileges. So I went ahead and created a steam user, switched to it and headed to its home directory:

```bash
adduser steam
su steam
cd ~
```

Next, I needed to install a few libraries that SteamCMD depends on, like the runtime libraries of the GNU C compiler and their friends. That’s where I hit the first roadblock:

```
steamcmd: error while loading shared libraries: libstdc++.so.6: cannot open shared object file: No such file or directory
```

Huh? A quick search on the Internet revealed that SteamCMD doesn’t like to run on a 64-bit OS. In fact:

SteamCMD is a 32-bit binary, so it needs 32-bit libraries.

On the other hand:

The prepackaged Linux VMs available in Azure come in 64-bit only.

Ouch. Luckily, the issue was easily solved by installing the 32-bit version of libgcc:

```bash
apt-get install lib32gcc1
```
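If you run into a similar mismatch, the `file` command will tell you whether a binary is 32-bit or 64-bit. The same information sits in the binary’s ELF header: the byte at offset 4 (EI_CLASS) is 1 for 32-bit and 2 for 64-bit executables. Here’s a minimal bash sketch that reads that byte directly with `od`; the `elf_class` function is a hypothetical helper made up for illustration.

```bash
#!/usr/bin/env bash
# elf_class FILE: prints "32-bit" or "64-bit" based on the ELF header of FILE.
# Hypothetical helper for illustration; `file FILE` reports the same thing.
elf_class() {
  local file="$1" magic class
  # Every ELF file starts with the four bytes 0x7f 'E' 'L' 'F'.
  magic=$(od -An -t x1 -N 4 "$file" | tr -d ' \n')
  if [ "$magic" != "7f454c46" ]; then
    echo "not ELF"
    return 1
  fi
  # The byte at offset 4 (EI_CLASS) is 1 for ELFCLASS32 and 2 for ELFCLASS64.
  class=$(od -An -t u1 -j 4 -N 1 "$file" | tr -d ' \n')
  case "$class" in
    1) echo "32-bit" ;;
    2) echo "64-bit" ;;
    *) echo "unknown"; return 1 ;;
  esac
}
```

On a 64-bit Ubuntu VM, `elf_class /bin/ls` should print `64-bit`, while pointing it at the SteamCMD binary (assumed here to live in `linux32/steamcmd` inside the unpacked tarball) should print `32-bit`.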

Finally, I was ready to download the SteamCMD binaries and unpack them:

```bash
wget http://media.steampowered.com/installer/steamcmd_linux.tar.gz
tar xzvf steamcmd_linux.tar.gz
```

The client itself was kicked off by a Bash script:

```bash
cd ./steamcmd
./steamcmd.sh
```

That brought down the necessary updates to the client tools and started an interactive prompt from which I could install the server for any of Valve’s Source games.

At this point, I could have continued down the same route and installed the CS:GO Dedicated Server (CSGO DS) using SteamCMD.

However, a few intricate problems would be waiting further down the road. So, I decided to back out and find a better solution.

```
Steam> quit
```

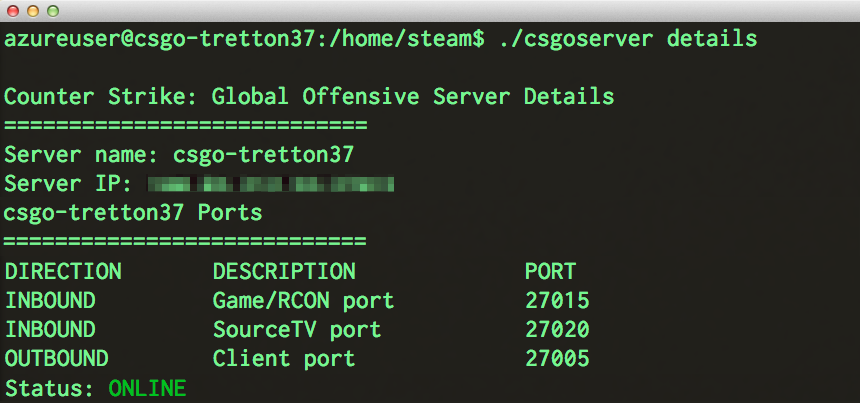

Step 3: Installing the CS:GO Dedicated Server

Remember that thing about SteamCMD being a 32-bit binary and the Linux VM on Azure being only available in 64-bit?

Well, that turned out to be a bigger issue than I thought. Even after having successfully installed the CS:GO server, getting it to run became a nightmare. The server was constantly complaining about the wrong version of some obscure libraries. Files and directories were missing. Everything was a mess.

Salvation came in the form of a meticulously crafted script, designed to take care of those nitty-gritty details for me.

Thanks to Daniel Gibbs' hard work, I could use his fabulous csgoserver script to install, configure and, above all, manage our CS:GO Dedicated Server without pain.

You can find a detailed description of how to use the csgoserver script on his site, so I’ll just report how I configured it to suit our deathmatch needs.

Step 4: Configuration

The CS:GO server offers a lot of settings, most of which live in the server.cfg configuration file. Together with the server’s launch options, that’s where you set up things like the game mode (Arms Race, Classic and Competitive, to name a few), the maximum number of players and so on.

Here’s how I configured it for the tretton37 deathmatch:

```
sv_password "secret"  // Requires a password to join the server
sv_cheats 0           // Disables cheat commands
sv_lan 0              // Disables LAN-only mode, so players can join over the Internet
```
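These settings don’t select the game mode itself. In CS:GO, each mode corresponds to a combination of the `game_type` and `game_mode` console variables, and Valve’s dedicated server documentation shows them being passed on the `srcds_run` command line. The sketch below encodes that table as I understand it, including deathmatch (`game_type 1`, `game_mode 2`); `csgo_mode_flags` is a hypothetical helper, and the map group and map in the example launch line are arbitrary choices.

```bash
#!/usr/bin/env bash
# csgo_mode_flags MODE: prints the launch parameters that select a CS:GO game mode.
# Hypothetical helper; values follow the game_type/game_mode table in Valve's docs.
csgo_mode_flags() {
  case "$1" in
    casual)      echo "+game_type 0 +game_mode 0" ;;
    competitive) echo "+game_type 0 +game_mode 1" ;;
    armsrace)    echo "+game_type 1 +game_mode 0" ;;
    demolition)  echo "+game_type 1 +game_mode 1" ;;
    deathmatch)  echo "+game_type 1 +game_mode 2" ;;
    *)           echo "unknown game mode: $1" >&2; return 1 ;;
  esac
}

# Print (rather than run) an illustrative launch line for a deathmatch server.
echo ./srcds_run -game csgo -console -usercon $(csgo_mode_flags deathmatch) +mapgroup mg_active +map de_dust2
```

If you manage the server with the csgoserver script, check its documentation for where it expects these values instead of writing the command line by hand.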

Step 5: Gold plating

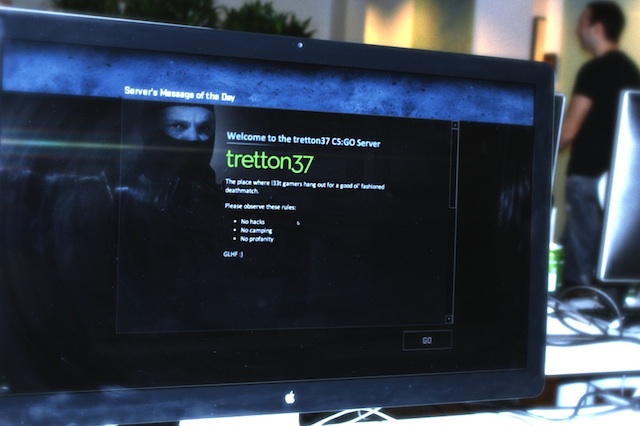

The final touch was to provide an appropriate Message of the Day (or MOTD) for the occasion. That would be the screen that greets the players as they join the game, setting the right tone.

Once again, the whole thing was done by simply editing a text file. In this case, the file contained some HTML markup and a few stylesheets and was located in /home/steam/csgo/motd.txt.

Here’s what it looked like in action:

Step 6: Deathmatch!

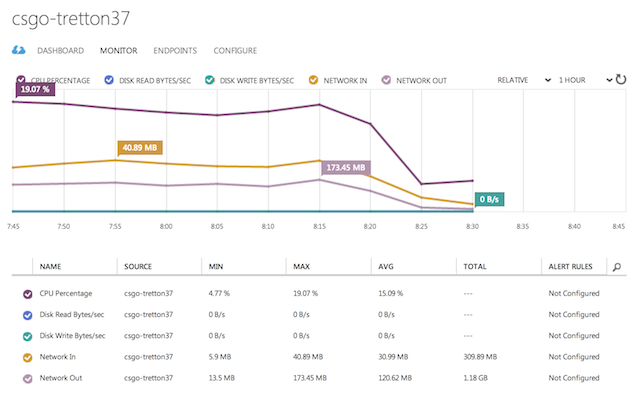

This article is primarily meant as a reference on how to configure a dedicated CS:GO server on a Linux box hosted on Microsoft Azure. Nonetheless, I figured it would be interesting to follow up with some information on how the server itself held up during that glorious game night.

Here are a few stats, taken both from the Azure Dashboard and from the operating system itself. Note that the server was running on a Large VM sporting a quad-core 1.6 GHz CPU and 7 GB of RAM:

- Number of simultaneous players: 16

- Average CPU load: 15 %

- Memory usage: 2.8 GB

- Total outbound network traffic served: 1.18 GB

In retrospect, that configuration was probably a little overkill for the job. A Medium VM with a dual-core 1.6 GHz CPU and 3.5 GB of RAM would probably have sufficed. But hey, elastic scaling is exactly what the cloud is for.
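The back-of-the-envelope reasoning behind that estimate can be spelled out in a few lines of shell. It assumes that the 15% average load was measured across all four cores and that the same work would load a smaller machine proportionally, which ignores effects like differing per-core performance; the function names are made up for illustration.

```bash
#!/usr/bin/env bash
# busy_cores CORES LOAD_PERCENT: average number of cores kept busy by the given load.
busy_cores() {
  awk -v cores="$1" -v load="$2" 'BEGIN { printf "%.1f\n", cores * load / 100 }'
}

# load_on CORES BUSY: the same amount of work as a load percentage on CORES cores.
load_on() {
  awk -v cores="$1" -v busy="$2" 'BEGIN { printf "%.0f\n", busy / cores * 100 }'
}

busy=$(busy_cores 4 15)   # Large VM: 4 cores at 15% load, about 0.6 cores busy
echo "CPU load on a dual-core Medium VM: $(load_on 2 "$busy")%"
```

Under those assumptions, the Medium VM would have run at roughly 30% CPU. Memory tells a similar story: 2.8 GB out of the Medium VM’s 3.5 GB would have meant about 80% usage.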

One final thought

Oddly enough, this experience opened my eyes to the great potential of cloud computing.

The CS:GO server was only intended to run for the duration of the event, which would last for a few hours. During that short period of time, I needed it to be as fast and responsive as possible. Hence, I went all out on the hardware.

As soon as the game night was over, I immediately shut down the virtual machine. The total cost for borrowing that awesome hardware for a few hours? Literally peanuts.

Amazing.

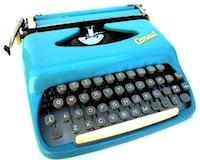

A gentle introduction to mechanical keyboards

I love typing. I still remember how, as a kid, I used to borrow my mother’s Diplomat typewriter from 1964 and start hammering away on it, pretending to type some long text:

“It sure sounds like you’re a fast typist!”

she would say when she heard the racket coming from my room. Little did she know that what came out on the sheet of paper was actually gibberish. Or maybe she was just being kind.

Anyway, I enjoyed the feeling of pressing those stiff plastic buttons and hearing the sound made by the metallic arm every time it punched a letter.

Today, I continue to enjoy a similar experience on my computer by using a mechanical keyboard.

So, what’s a mechanical keyboard?

First and foremost, let’s get the terminology straight by answering that burning question.

Wikipedia’s definition gives us a good starting point, although it’s still pretty vague:

Mechanical-switch keyboards use real switches underneath every key. Depending on the construction of the switch, such keyboards have varying response and travel times.

That leaves us wondering: what do they mean by real switches? Well, in this case real means physical, in the sense that under each key there’s a metal spring that compresses every time you press the key. The spring is housed inside a plastic device that makes sure the key is registered by the computer at a specific point of its travel while it’s being pressed. That device is called a switch.

So, let’s reformulate the definition of a mechanical keyboard:

A keyboard is considered mechanical if it uses metal springs underneath each key.

It’s still true that there are different types of mechanical switches, each with their own special characteristics. The kind of switch used in a mechanical keyboard determines the overall feeling you’ll get when you type on it.

A little bit of history

At this point you might rightfully wonder:

Wait a minute. Aren’t all keyboards mechanical?

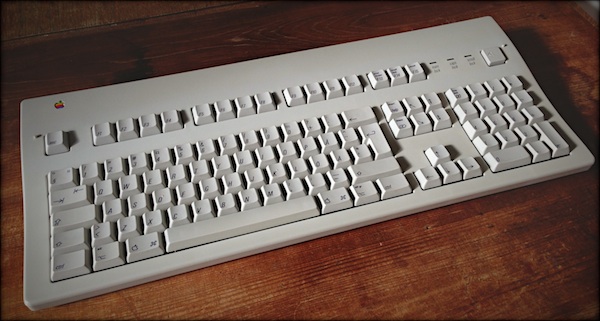

No, they are not. At least not anymore. The keyboards sold with the first personal computers back in the late 70’s, however, certainly were.

Back then, computer keyboards were designed to mimic the feeling and behavior of typewriters. These were robust pieces of hardware, built with quality in mind. They used solid materials, like metal plates and thick ABS plastic frames. The keys were stiff enough to allow the typist to rest her fingers on the home row without accidentally pressing any key.

If you’re in your 30’s, chances are you remember how it felt to type on one of these keyboards, maybe in front of a Macintosh or a Commodore 64. All throughout the 80’s and early 90’s, every keyboard was mechanical. And heavy.

But then things started to change. When PCs became a mass-market product, the additional cost of producing quality mechanical keyboards to go along with them was no longer justifiable. Consumers didn’t seem to care, either. As graphical user interfaces (GUIs) grew in popularity during the mid 80’s, the keyboard, which had been the primary way to interact with computers up to that point, had to make room for another kind of input device: the mouse.

Keyboards, like computers, became a commodity sold in large volumes. In a continuous effort to bring production costs down, new designs and materials became mainstream. Focus shifted from quality and durability to cost effectiveness.

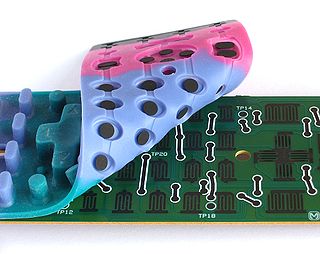

Consequently, the heavy metal plates disappeared in favor of cheaper silicone membranes placed on top of electrical matrices. Metal springs made way for small air-filled rubber domes under each key. This keyboard design is called rubber dome, or membrane, and is to this day the most common type of keyboard sold with desktop computers.

For laptops, a new kind of low-profile rubber dome switch began to appear. Made out of thin plastic parts, these switches were called scissor switches because of their collapsible X shape.

While today’s mainstream keyboards certainly serve their purpose, they have a limited lifetime.

With time and usage, the rubber bubbles eventually wear out, which means you have to press harder on the keys to activate them. The letters printed on the keys fade or peel off. The cheap plastic parts loosen up or bend. And by the time you’re finally ready to throw those suckers in the bin, they’ve managed to drive you nuts.

Mechanical keyboards, on the other hand, are built to last.

As a testament to their extreme durability, I can tell you that the oldest one in my collection is an Apple Extended Keyboard II dating all the way back to 1989, and it still works like a charm.

That’s a 25-year-old piece of hardware, still in such good condition that I use it daily as my primary keyboard at home.

It’s all about the feeling

However, believe it or not, the biggest problem with rubber dome keyboards isn’t their fragility. It’s the fact that they’re an ergonomic nightmare.

We said that the switches are responsible for telling the computer which key is being pressed. The precise moment when that happens is called the actuation point. The distance between a key’s rest position and the point where it’s fully pressed down is called key travel.

Now, in rubber dome keyboards, the only way to actuate a key is to press it all the way to the bottom (also known as bottoming out). That’s when the silicone air bubble beneath it gets squashed, sending an electrical signal to the computer. This means that your fingers have to travel a longer distance when typing, which puts a strain on your hands and wrists.

Mechanical keys, on the other hand, are actuated before they bottom out. For most types of switches, that happens about halfway through the key travel.

What does this mean in practice? Well, it means that you don’t have to press as hard on your keyboard when you type. Your fingers don’t have to travel as much and, consequently, you don’t get as fatigued. It may even help you type faster.

To each his own switch

There are at least a dozen different kinds of mechanical switches available on the market today. That number doubles if you count the vintage ones.

Each and every one of them has its own way of physically actuating a key, which gives it a distinctive feel.

The buckling spring switch patented by IBM in 1971, for example, actuates a key by having the internal spring buckle outwards as it collapses. That activates a plastic hammer located beneath the spring, which, upon hitting a contact, closes an electrical circuit. As the spring buckles, it hits the inner wall of its plastic housing, producing a loud “click” sound. That feedback signals to the typist that the key has been registered.

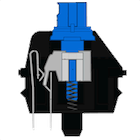

The modern Cherry MX Blue switch, on the other hand, uses a leaf spring pushed by a plastic slider (the grey one in the picture) as it passes by on its way down. When the slider is halfway through, the leaf spring encounters no more resistance from the slider and closes a circuit that signals the key press to the computer. As the grey slider hits the bottom of the switch, it emits a high-pitched “click” sound similar to that of a mouse button.

Don’t worry, I won’t get into the nuts and bolts of all the different switches. The Internet will happily provide you with more information than you could possibly ask for in that regard.

The important thing to remember is that the ergonomic properties of a mechanical keyboard are determined by three fundamental characteristics of its switches:

- Tactility

- Force

- Sound

Let’s look at each of them briefly.

Tactility

A switch is called tactile if it gives some kind of feedback about when a key is actuated. This is usually done by significantly dropping the key’s resistance the instant it gets registered. Some switches even accompany that with a “click” sound and are therefore referred to as “clicky”.

Force

The force indicates how hard you have to press a key in order to actuate it. It’s measured in centinewtons (cN) or, more commonly, in grams-force (gf). In practice they’re interchangeable, since 1 cN is roughly equivalent to 1 gf (more precisely, 1 cN ≈ 1.02 gf).

Switches get progressively stiffer the further down you press a key, which, again, helps you avoid bottoming out. A soft switch requires about 45 cN to actuate. A stiff one requires nearly twice that, around 80 cN.
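To make the conversion concrete: 1 cN is 0.01 N, while 1 gf is the weight of one gram under standard gravity, 0.00980665 N. Dividing the two gives the 1.02 factor. A small shell helper (hypothetical, written for illustration) applies it to the typical soft and stiff switches:

```bash
#!/usr/bin/env bash
# cn_to_gf CENTINEWTONS: converts an actuation force from cN to grams-force.
# 1 cN = 0.01 N and 1 gf = 0.00980665 N (standard gravity), so 1 cN is about 1.02 gf.
cn_to_gf() {
  awk -v cn="$1" 'BEGIN { printf "%.2f\n", cn * 0.01 / 0.00980665 }'
}

for force in 45 80; do
  echo "$force cN = $(cn_to_gf "$force") gf"
done
# 45 cN = 45.89 gf
# 80 cN = 81.58 gf
```

The difference between the two units is only about 2%, which is why they’re so often used interchangeably.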

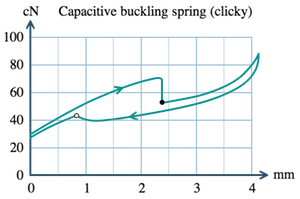

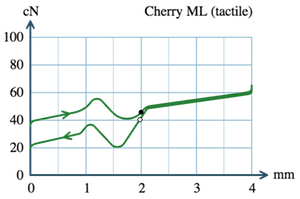

These are called force graphs. They show how much force, in cN (Y axis), is required to press a key over its travel, in mm (X axis), from the rest position to the bottom. Did you notice the sudden drop in force about halfway through the travel? That’s how tactile switches announce that the key has been actuated, as shown by the little black dot.

Sound

As I mentioned earlier, certain switches emit a “click” sound when they get actuated. This extra piece of feedback can either be pleasurable (even nostalgic if you will) or totally annoying. I strongly advise you to investigate how the people who will be sitting next to you feel about clicky keyboards before buying one. And telling them that all keyboards sounded like that back in the 80’s no longer cuts it.

Wrapping it up

I know it sounds crazy, but I’ve barely scratched the surface of what there is to know about mechanical keyboards. However, the purpose of this post was to give you an idea about what they are and where they come from, and I hope I’ve succeeded in that.

If you’re eager to know more, fear not. I intend to write more about this topic. Next time, I’ll start reviewing some of my favorite mechanical keyboards. In the meantime, take a look at the official guide to mechanical keyboards put together by a growing community of keyboard enthusiasts.

BDD all the way down

TDD makes sense and improves the quality of your code. I don’t think anybody could argue against this simple fact. Is TDD flawless? Well, that’s a whole different discussion.

But let’s back up for a moment.

Picture this: you’ve just joined a team tasked with developing some kind of software which you know nothing about. The only thing you know is that it’s an implementation of Conway’s Game of Life as a web API. Your first assignment is to develop a feature in the system:

In order to display the current state of the Universe

As a Game of Life client

I want to get the next generation from underpopulated cells

You want to implement this feature by following the principles of TDD, so you know that the first thing you should do is start writing a failing test. But, what should you test? How would you express this requirement in code? What’s even a cell?

If you’re looking to TDD for some guidance, well, I’m sorry to tell you that you’ll find none. TDD has one rule. Do you remember what the first rule of TDD is? (No, it’s not “you don’t talk about TDD”):

Thou shalt not write a single line of production code without a failing test.

And that’s basically it. TDD doesn’t tell you what to test, how you should name your tests, or even how to understand why they fail in the first place.

As a programmer, the only thing you can think of, at this point, is writing a test that checks for nulls. Arguably, that’s the equivalent of trying to start a car by emptying the ashtrays.

Let the behavior be your guide

What if we stopped worrying about writing tests for the sake of, well, writing tests, and instead focused on verifying what the system is supposed to do in a certain situation? That’s called behavior and a lot of good things may come out of letting it be the driving force when developing software. Dan North noticed this during his research, which ultimately led him to the formalization of Behavior-driven Development:

I started using the word “behavior” in place of “test” in my dealings with TDD and found that not only did it seem to fit but also that a whole category of coaching questions magically dissolved. I now had answers to some of those TDD questions. What to call your test is easy – it’s a sentence describing the next behavior in which you are interested. How much to test becomes moot – you can only describe so much behavior in a single sentence. When a test fails, simply work through the process described above – either you introduced a bug, the behavior moved, or the test is no longer relevant.

Focusing on behavior has other advantages, too. It forces you to understand the context in which the feature you’re developing is going to work. It makes you see the value that it brings for the users. And, last but not least, it forces you to ask questions about the concepts mentioned in the requirements.

So, what’s the first thing you should do? Well, let’s start by understanding what a generation of cells is and then we can move on to the concept of underpopulation:

Any live cell with fewer than two live neighbors dies, as if by needs caused by underpopulation.

Acceptance tests

At this point we’re ready to write our first test. But, since there’s still no code for the feature, what exactly are we supposed to test? The answer to that question is simpler than you might expect: the system itself.

We’re implementing a requirement for our Game of Life web API. The user is supposed to make an HTTP request to a certain URL, sending a list of cells formatted as JSON, and get back a response containing the same list of cells after the rule of underpopulation has been applied. We’ll know we’ve fulfilled the requirement when the system does exactly that. In other words, that’s the requirement’s acceptance criterion. It sure sounds like a good place to start writing a test.

Let’s express it in the format formalized by Dan North:

```gherkin
Scenario: Death by underpopulation
  Given a live cell has fewer than 2 live neighbors
  When I ask for the next generation of cells
  Then I should get back a new generation
  And it should have the same number of cells
  And the cell should be dead
```

Here, we call the acceptance criterion a scenario and use a Given-When-Then syntax to express its premises, action and expected outcome. Having a common language like this for expressing software requirements is one of the greatest innovations brought by BDD.

So, we said we were going to test the system itself. In practice, that means we must put ourselves in the user’s shoes and let the test interact with the system at its outermost boundaries. In the case of a web API, that translates into sending HTTP requests and receiving HTTP responses.
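To get a feel for what interacting with the system at its outermost boundaries means, here’s the same interaction performed by hand with curl. This is a sketch under assumptions: the base URL is hypothetical, and the JSON property names assume the API serializes cells with the same `Alive` and `Neighbors` names used in the step definitions. `cell_json` and `next_generation` are illustrative helpers, not part of any framework.

```bash
#!/usr/bin/env bash
# Hypothetical address of a locally running instance of the Game of Life API.
BASE_URL="${BASE_URL:-http://localhost:5000}"

# cell_json ALIVE NEIGHBORS: prints a single cell as a JSON object.
cell_json() {
  printf '{"Alive":%s,"Neighbors":%d}' "$1" "$2"
}

# next_generation JSON: POSTs a generation of cells and prints the response body.
# --fail makes curl exit with a non-zero status (22) on HTTP errors such as 404.
next_generation() {
  curl --silent --show-error --fail \
       --header 'Content-Type: application/json' \
       --data "$1" \
       "$BASE_URL/api/generation"
}
```

Given a live cell with fewer than 2 live neighbors, asking for the next generation boils down to `next_generation "[$(cell_json true 1)]"`. Until the endpoint is implemented, that call fails, and so will the acceptance test.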

In order to turn our scenario into an executable test, we need some kind of framework that can map the Given-When-Then sentences to methods. In the realm of .NET, one such framework is SpecFlow. Here’s how we could use it together with C# to implement our test:

```csharp
IEnumerable<dynamic> generation;
HttpResponseMessage response;
IEnumerable<dynamic> nextGeneration;

[Given]
public void Given_a_live_cell_has_fewer_than_COUNT_live_neighbors(int count)
{
    generation = new[]
    {
        new { Alive = true, Neighbors = --count }
    };
}

[When]
public void When_I_ask_for_the_next_generation_of_cells()
{
    response = WebClient.PostAsJson("api/generation", generation);
}

[Then]
public void Then_I_should_get_back_a_new_generation()
{
    response.ShouldBeSuccessful();
    nextGeneration = ParseGenerationFromResponse();
    nextGeneration.ShouldNot(Be.Null);
}

[Then]
public void Then_it_should_have_the_same_number_of_cells()
{
    nextGeneration.Should(Have.Count.EqualTo(1));
}

[Then]
public void Then_the_cell_should_be_dead()
{
    var isAlive = (bool)nextGeneration.Single().Alive;
    isAlive.ShouldBeFalse();
}

IEnumerable<dynamic> ParseGenerationFromResponse()
{
    return response.ReadContentAs<IEnumerable<dynamic>>();
}
```

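As you can see, each step of the scenario is mapped directly to a method whose name contains the same words as the step, separated by underscores. The uppercase COUNT in the first method’s name acts as a placeholder: it matches the number in the sentence and passes it to the count parameter.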

At this point, we can finally run our acceptance test and watch it fail:

```
Given a live cell has fewer than 2 live neighbors
-> done.
When I ask for the next generation of cells
-> done.
Then I should get back a new generation
-> error: Expected: in range (200 OK, 299)
  But was: 404 NotFound
```

As expected, the test fails on the first assertion with an HTTP 404 response. We know this is correct, since there’s nothing listening on that URL on the other end. Now that we understand why the test fails, we can officially start implementing the feature.

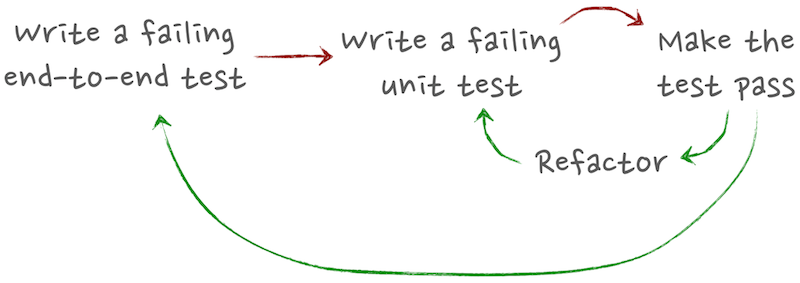

The TDD cycle

We’re about to get our hands dirty (albeit keeping the code clean) and dive into the system. At this point we follow the normal rules of Test-driven development with its Red-Green-Refactor cycle. However, we’re faced with the exact same problem we had at the boundaries of the system. What should our first test be? Here, too, we’ll let the behavior be our guiding light.

If you have experience writing unit tests, my guess is that you’re used to writing one test class for every production code class. For example, given SomeClass you’d write your tests in SomeClassTests. This is fine, but I’m here to tell you that there’s a better way. If we focus on how a class should behave in a given situation, wouldn’t it be more natural to have one test class per scenario?

Consider this:

```csharp
public class When_getting_the_next_generation_with_underpopulation
    : ForSubject<GenerationController>
{
}
```

This class will contain all the tests related to how the GenerationController class behaves when asked to get the next generation of cells given that one of them is underpopulated.

But, wait a minute. Wouldn’t that create a lot of tiny test classes?

Yes. One per scenario to be precise. That way, you’ll know exactly which class to look at when you’re working with a certain feature. Besides, having many small cohesive classes is better than having giant ones with all kinds of tests in them, don’t you think?

Let’s get back to our test. Since we’re testing one single scenario, we can structure it much in the same way as we would an acceptance test:

For each scenario there's exactly one context to set up, one action and one or more assertions on the outcome.

As always, readability is king, so we’d like to express our test in a way that gets us as close as possible to human language. In other words, our tests should read like specifications:

```csharp
public class When_getting_the_next_generation_with_underpopulation
    : ForSubject<GenerationController>
{
    static Cell solitaryCell;
    static IEnumerable<Cell> currentGen;
    static IEnumerable<Cell> nextGen;

    Establish context = () =>
    {
        solitaryCell = new Cell { Alive = true, Neighbors = 1 };
        currentGen = AFewCellsIncluding(solitaryCell);
    };

    Because of = () =>
        nextGen = Subject.GetNextGeneration(currentGen);

    It should_return_the_next_generation_of_cells = () =>
        nextGen.ShouldNotBeEmpty();

    It should_include_the_solitary_cell_in_the_next_generation = () =>
        nextGen.ShouldContain(solitaryCell);

    It should_only_include_the_original_cells_in_the_next_generation = () =>
        nextGen.ShouldContainOnly(currentGen);

    It should_mark_the_solitary_cell_as_dead = () =>
        solitaryCell.Alive.ShouldBeFalse();
}
```

In this case I’m using a test framework for .NET called Machine.Specifications or MSpec. MSpec belongs to a category of frameworks called BDD-style testing frameworks. There are at least a few of them for almost every language known to man (including Haskell). What they all have in common is a strong focus on allowing you to express your tests in a way that resembles requirements.

Speaking of readability, see all those underscores, static variables and lambdas? Those are just the tricks MSpec has to pull on the C# compiler in order to give us a domain-specific language to express requirements while still producing runnable code. Other frameworks have different techniques to get as close as possible to human language without angering the compiler. Which one you choose is largely a matter of preference.

Wrapping it up

I’ll leave the implementation of the GenerationController class as an exercise for the reader, since it’s outside the scope of this article. If you like, you can find mine over at GitHub.

What’s important here is that, after a few rounds of Red-Green-Refactor, we’ll finally be able to run our initial acceptance test and see it pass. At that point, we’ll know with certainty that we’ve successfully implemented our feature.

Let’s recap our entire process with a picture:

This approach to developing software is called Outside-in development and is described beautifully in Steve Freeman and Nat Pryce’s excellent book Growing Object-Oriented Software, Guided by Tests.

In our little exercise, we grew a feature in our Game of Life web API from the outside-in, following the principles of Behavior-driven Development.

Presentation

You can see a complete recording of the talk I gave at Foo Café last year about this topic. The presentation covers pretty much the material described in this article and expands a bit on the programming aspect of BDD. I hope you’ll find it useful. If you have any questions, please feel free to contact me directly or write in the comments.

Here’s the abstract:

In this session I’ll show how to apply the Behavior Driven Development (BDD) cycle when developing a feature from top to bottom in a fictitious .NET web application. Building on the concepts of Test-driven Development, I’ll show you how BDD helps you produce tests that read like specifications, by forcing you to focus on what the system is supposed to do, rather than how it’s implemented.

Starting from the acceptance tests seen from the user’s perspective, we’ll work our way through the system implementing the necessary components at each tier, guided by unit tests. In the process, I’ll cover how to write BDD-style tests both in plain English with SpecFlow and in C# with MSpec. In other words, it’ll be BDD all the way down.