General-purpose customizations with AutoFixture

If you’ve been using AutoFixture in your tests for more than a while, chances are you’ve already come across the concept of customizations. If you’re not familiar with it, let me give you a quick introduction:

A customization is a group of settings that, when applied to a given Fixture object, control the way AutoFixture will create instances for the types requested through that Fixture.

At this point you might find yourself feeling an irresistible urge to know everything there is to know about customizations. If that’s the case, don’t worry. There are a few resources online where you can learn more about them. For example, I wrote about how to take advantage of customizations to group together test data related to specific scenarios.

In this post I’m going to talk about something different which, in a sense, is quite the opposite of that: how to write general-purpose customizations.

A (user) story about cooking

It’s hard to talk about test data without a bit of context. So, for the sake of this post, I thought we would pretend to be working on a somewhat realistic project. The system we’re going to build is an online catalogue of food recipes. The domain, at the very basic level, consists of three concepts:

- Cookbook

- Recipes

- Ingredients

Now, let’s imagine that in our backlog of requirements we have one where the user wishes to be able to search for recipes that contain a specific set of ingredients. Or in other words:

As a foodie, I want to know which recipes I can prepare with the ingredients I have, so that I can get the best value for my groceries.

From the tests…

As usual, we start out by translating the requirement at hand into a set of acceptance tests. In order to do that, we need to tell AutoFixture how we’d like the test data for our domain model to be generated.

For this particular scenario, we need every Ingredient created in the test fixture to be randomly chosen from a fixed pool of objects. That way we can ensure that all recipes in the cookbook will be made up of the same set of ingredients.

Here’s what such a customization would look like:

public class RandomIngredientsFromFixedSequence : ICustomization

{

private readonly Random randomizer = new Random();

private IEnumerable<Ingredient> sequence;

public void Customize(IFixture fixture)

{

InitializeIngredientSequence(fixture);

fixture.Register(PickRandomIngredientFromSequence);

}

private void InitializeIngredientSequence(IFixture fixture)

{

// Materialize the pool once so every pick draws from the same instances.

this.sequence = fixture.CreateMany<Ingredient>().ToList();

}

private Ingredient PickRandomIngredientFromSequence()

{

var randomIndex = this.randomizer.Next(0, sequence.Count());

return sequence.ElementAt(randomIndex);

}

}

Here we’re creating a pool of ingredients and, by using the Fixture.Register method, telling AutoFixture to randomly pick one of them every time it needs to create an Ingredient object.

Since we’ll be using xUnit.net as our test runner, we can take advantage of the AutoFixture Data Theories to keep our tests succinct by using AutoFixture in a declarative fashion. In order to do so, we need to write an xUnit Data Theory attribute that tells AutoFixture to use our new customization:

public class CookbookAutoDataAttribute : AutoDataAttribute

{

public CookbookAutoDataAttribute()

: base(new Fixture().Customize(

new RandomIngredientsFromFixedSequence()))

{

}

}

If you prefer to use AutoFixture directly in your tests, the imperative equivalent of the above is:

var fixture = new Fixture();

fixture.Customize(new RandomIngredientsFromFixedSequence());

At this point, we can finally start writing the acceptance tests to satisfy our original requirement:

public class When_searching_for_recipies_by_ingredients

{

[Theory, CookbookAutoData]

public void Should_only_return_recipes_with_a_specific_ingredient(

Cookbook sut,

Ingredient ingredient)

{

// When

var recipes = sut.FindRecipies(ingredient);

// Then

Assert.True(recipes.All(r => r.Ingredients.Contains(ingredient)));

}

[Theory, CookbookAutoData]

public void Should_include_new_recipes_with_a_specific_ingredient(

Cookbook sut,

Ingredient ingredient,

Recipe recipeWithIngredient)

{

// Given

sut.AddRecipe(recipeWithIngredient);

// When

var recipes = sut.FindRecipies(ingredient);

// Then

Assert.Contains(recipeWithIngredient, recipes);

}

}

Notice that during these tests AutoFixture will have to create Ingredient objects in a couple of different ways:

- indirectly when constructing Recipe objects associated to a Cookbook

- directly when providing arguments for the test parameters

As far as AutoFixture is concerned, it doesn’t really matter which code path leads to the creation of ingredients. The algorithm provided by the RandomIngredientsFromFixedSequence customization will apply in all situations.

…to the implementation

After a couple of Red-Green-Refactor cycles spawned from the above tests, we might well end up with production code similar to this:

// Cookbook.cs

public class Cookbook

{

private readonly ICollection<Recipe> recipes;

public Cookbook(IEnumerable<Recipe> recipes)

{

this.recipes = new List<Recipe>(recipes);

}

public IEnumerable<Recipe> FindRecipies(params Ingredient[] ingredients)

{

return recipes.Where(r => r.Ingredients.Intersect(ingredients).Any());

}

public void AddRecipe(Recipe recipe)

{

this.recipes.Add(recipe);

}

}

// Recipe.cs

public class Recipe

{

public readonly IEnumerable<Ingredient> Ingredients;

public Recipe(IEnumerable<Ingredient> ingredients)

{

this.Ingredients = ingredients;

}

}

// Ingredient.cs

public class Ingredient

{

public readonly string Name;

public Ingredient(string name)

{

this.Name = name;

}

}

Nice and simple. But let’s not stop here. It’s time to take it a bit further.

An opportunity for generalization

Given the fact that we started working from a very concrete requirement, it’s only natural that the RandomIngredientsFromFixedSequence customization we came up with encapsulates a behavior that is specific to the scenario at hand. However, if we take a closer look we might notice the following:

The only part of the algorithm that is specific to the original scenario is the type of the objects being created. The rest can easily be applied whenever you want to create objects that are picked at random from a predefined pool.

An opportunity for writing a general-purpose customization has just presented itself. We can’t let it slip.

Let’s see what happens if we extract the Ingredient type into a generic argument and remove all references to the word “ingredient”:

public class RandomFromFixedSequence<T> : ICustomization

{

private readonly Random randomizer = new Random();

private IEnumerable<T> sequence;

public void Customize(IFixture fixture)

{

InitializeSequence(fixture);

fixture.Register(PickRandomItemFromSequence);

}

private void InitializeSequence(IFixture fixture)

{

// Materialize the pool once so every pick draws from the same instances.

this.sequence = fixture.CreateMany<T>().ToList();

}

private T PickRandomItemFromSequence()

{

var randomIndex = this.randomizer.Next(0, sequence.Count());

return sequence.ElementAt(randomIndex);

}

}

Voilà. We just turned our scenario-specific customization into a pluggable algorithm that changes the way objects of any type are going to be generated by AutoFixture. In this case the algorithm will create items by picking them at random from a fixed sequence of T.

The CookbookAutoDataAttribute can easily be changed to use the general-purpose version of the customization by closing the generic argument with the Ingredient type:

public class CookbookAutoDataAttribute : AutoDataAttribute

{

public CookbookAutoDataAttribute()

: base(new Fixture().Customize(

new RandomFromFixedSequence<Ingredient>()))

{

}

}

The same is true if you’re using AutoFixture imperatively:

var fixture = new Fixture();

fixture.Customize(new RandomFromFixedSequence<Ingredient>());

Wrapping up

As I said before, customizations are a great way to set up test data for a specific scenario. Sometimes these configurations turn out to be useful in more than just one situation.

When such an opportunity arises, it’s often a good idea to separate out the parts that are specific to a particular context and turn them into parameters. This allows the customization to become a reusable strategy for controlling AutoFixture’s behavior across entire test suites.

Adventures in overclocking a Raspberry Pi

A little background

For the past couple of months I’ve been running a Raspberry Pi as my primary NAS at home. It wasn’t something that I had planned. On the contrary, it all started by chance when I received a Raspberry Pi as a conference gift at last year’s Leetspeak. But why use it as a NAS when there are much better products on the market, you might ask. Well, because I happen to have a small network closet at home and the Pi is a pretty good fit for a device that’s capable of acting like a NAS while at the same time taking very little space.

Much like the size, the setup itself is also striking in its simplicity: I plugged in an external 1 TB WD Element USB drive that I had lying around (the black box sitting above the Pi in the picture on the right), installed Raspbian on an SD memory card and went to town. Once booted, the little Pi exposes the storage space on the local network through two channels:

- As a Windows file share on top of the SMB protocol, through Samba

- As an Apple file share on top of the AFP protocol, through Netatalk

On top of that it also runs a headless version of the CrashPlan Linux client to back up the contents of the external drive to the cloud. So, the Pi not only works as central storage for all my files, but it also manages to fool Mac OS X into thinking that it’s a Time Capsule. Not too bad for a tiny ARM11 700 MHz processor and 256 MB of RAM.

The need for (more) speed

A Raspberry Pi needs 5 volts of electricity to function. On top of that, depending on the number and kind of devices that you connect to it, it’ll draw approximately between 700 and 1400 milliamperes (mA) of current. This gives an average consumption of roughly 5 watts, which makes it ideal to work as an appliance that’s on 24/7. However, as impressive as all of this might be, it’s not all sunshine and rainbows. In fact, as the expectations get higher, the Pi’s limited hardware resources quickly become a frustrating bottleneck.
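To see where that figure comes from, remember that power is simply voltage multiplied by current. Here’s a quick back-of-the-envelope sketch; power_watts is a helper name made up for illustration, and taking the 1050 mA midpoint as the "average" draw is an assumption on my part:

```shell
#!/bin/sh
# Power (W) = voltage (V) x current (A); the current is passed in milliamperes.
power_watts() {
    # $1 = volts, $2 = milliamperes
    awk -v v="$1" -v ma="$2" 'BEGIN { printf "%.2f\n", v * ma / 1000 }'
}

power_watts 5 700     # lower end of the range
power_watts 5 1400    # upper end of the range
power_watts 5 1050    # midpoint of the two (assumed "average")
```

The three calls work out to 3.50, 7.00 and 5.25 watts respectively, which is where the "roughly 5 watts" comes from.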

Luckily for us, the folks at the Raspberry Pi Foundation have made it fairly easy to squeeze more power out of the little piece of silicon by officially allowing overclocking. Now, there are a few different combinations of frequencies that you can use to boost the CPU and GPU in your Raspberry Pi.

The amount of stable overclocking that you’ll be able to achieve, however, depends on a number of physical factors, such as the quality of the soldering on the board and the amount of output that’s supported by the power supply in use. In other words, YMMV.

There are also at least a couple of different ways to go about overclocking a Raspberry Pi. I found that the most suitable one for me is to manually edit the configuration file found at /boot/config.txt. This will not only give you fine-grained control over which parts of the board to overclock, but it will also allow you to change other aspects of the process such as voltage, temperature thresholds and so on.

In my case, I managed to work my way up from the stock 700 MHz to 1 GHz through a number of small incremental steps. Here’s the final configuration I ended up with:

arm_freq=1000

core_freq=500

sdram_freq=500

over_voltage=6

force_turbo=0

One thing to notice is the force_turbo option that’s currently turned off. It’s there because, until September of last year, modifying the CPU frequencies of the Raspberry Pi would set a permanent bit inside the chip that voided the warranty.

However, having recognized the widespread interest in overclocking, the Raspberry Pi Foundation decided to give it their blessing by building a feature into their own version of the Linux kernel called Turbo Mode. This allows the operating system to automatically increase and decrease the speed and voltage of the CPU based on how much load is put on the system, thus reducing the impact on the hardware’s lifetime to effectively zero.

Setting the force_turbo option to 1 will cause the CPU to run at its full speed all the time and will apparently also contribute to setting the dreaded warranty bit in some configurations.
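As a rough sanity check on the over_voltage setting: assuming, as the Raspberry Pi firmware documentation describes, that each step adds 0.025 V on top of the 1.20 V default, the resulting core voltage can be worked out like this (core_voltage is a hypothetical helper, not a system command):

```shell
#!/bin/sh
# Core voltage = 1.20 V default + 0.025 V per over_voltage step (assumed step size).
core_voltage() {
    # $1 = value of the over_voltage setting
    awk -v steps="$1" 'BEGIN { printf "%.3f\n", 1.20 + steps * 0.025 }'
}

core_voltage 0    # stock setting
core_voltage 6    # the over_voltage=6 used in the configuration above
```

With over_voltage=6 this comes out at 1.350 V, that is 0.15 V above the default.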

Entering Turbo Mode

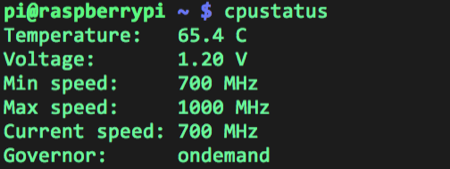

When Turbo Mode is enabled, the CPU speed and voltage will switch between two values, a minimum one and a maximum one, both of which are configurable. When it comes to speed, the default minimum is the stock 700 MHz. The default voltage is 1.20 V. During my overclocking experiments I wanted to keep a close eye on these parameters, so I wrote a simple Bash script that fetches the current state of the CPU from different sources within the system and displays a brief summary. Here’s what it looks like when the system is idle:

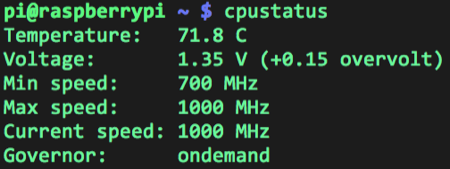

See how the current speed is equal to the minimum one? Now, take a look at how things change on full blast with Turbo Mode kicked in:

As you can see, the CPU is running hot at the maximum speed of 1 GHz fed with 0.15 extra volts.

The last line shows the governor, which is a piece of the Linux kernel driver called cpufreq that’s responsible for adjusting the speed of the processor. The governor is the strategy that regulates exactly when and how much the CPU frequency will be scaled up and down. The one that’s currently in use is called ondemand and it’s the foundation upon which the entire Turbo Mode is built.
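For the curious, a summary like the one described above can be put together from the files that cpufreq exposes under sysfs. What follows is a minimal sketch and not the actual script from my experiments: the function names and output layout are made up for illustration, and the voltage and temperature lines assume that the vcgencmd tool shipped with Raspbian is installed.

```shell
#!/bin/sh
# Sketch of a CPU status summary built on the cpufreq sysfs files.

# cpufreq reports frequencies in kHz; convert them to MHz for display.
khz_to_mhz() {
    awk -v khz="$1" 'BEGIN { printf "%d", khz / 1000 }'
}

cpu_summary() {
    dir="$1"
    if [ ! -r "$dir/scaling_cur_freq" ]; then
        echo "No cpufreq data found in $dir" >&2
        return 1
    fi
    echo "Current speed: $(khz_to_mhz "$(cat "$dir/scaling_cur_freq")") MHz"
    echo "Minimum speed: $(khz_to_mhz "$(cat "$dir/scaling_min_freq")") MHz"
    echo "Maximum speed: $(khz_to_mhz "$(cat "$dir/scaling_max_freq")") MHz"
    # vcgencmd only exists on Raspberry Pi systems, so treat it as optional.
    if command -v vcgencmd >/dev/null 2>&1; then
        echo "Core voltage:  $(vcgencmd measure_volts core)"
        echo "Temperature:   $(vcgencmd measure_temp)"
    fi
    echo "Governor:      $(cat "$dir/scaling_governor")"
}

# Default to the first CPU; set CPUFREQ_DIR to point somewhere else.
cpu_summary "${CPUFREQ_DIR:-/sys/devices/system/cpu/cpu0/cpufreq}" \
    || echo "cpufreq is not available on this machine"
```

Taking the directory as a parameter is just a convenience: it makes it possible to try the function against a folder of sample files on a machine that isn’t a Pi.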

It’s interesting to notice that the choice of governor, contrary to what you would expect, isn’t fixed. The cpufreq driver can, in fact, be configured to use a different governor during boot simply by modifying a file on disk. For example, changing from the ondemand governor to the one called powersave would lock the CPU speed at its minimum value, effectively disabling Turbo Mode:

cat /sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

# Prints ondemand

# Writing to this file requires root privileges

echo "powersave" > /sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

cat /sys/devices/system/cpu/cpu0/cpufreq/scaling_governor

# Prints powersave

Here’s a list of available governors as seen in Raspbian:

cat /sys/devices/system/cpu/cpu0/cpufreq/scaling_available_governors

# Prints conservative ondemand userspace powersave performance
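Since the governor name is just a string written to a file, it’s easy to mistype it. Here’s a small sketch of a wrapper that checks the requested name against the list of available governors before writing it. The set_governor function is hypothetical, not part of any standard tool, and it takes the cpufreq directory as an optional second argument purely so that it can be tried out on sample files:

```shell
#!/bin/sh
# Switch governor only if the requested one is listed as available.
set_governor() {
    # $1 = governor name, $2 = cpufreq directory (optional)
    gov="$1"
    dir="${2:-/sys/devices/system/cpu/cpu0/cpufreq}"
    for available in $(cat "$dir/scaling_available_governors"); do
        if [ "$available" = "$gov" ]; then
            echo "$gov" > "$dir/scaling_governor"
            return 0
        fi
    done
    echo "Unknown governor: $gov" >&2
    return 1
}
```

On the Pi you would call it as root, for example `set_governor powersave`. Keep in mind that values written under /sys don’t survive a reboot, so the call has to be repeated at startup if you want the change to stick.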

If you’re interested in seeing how they work, I encourage you to check out the cpufreq source code on GitHub. It’s very well written.

Fine tuning

I’ve managed to get a pretty decent performance boost out of my Raspberry Pi just by applying the settings shown above. However, there are still a couple of knobs left to tweak before we can settle.

The ondemand governor used in the Raspberry Pi will increase the CPU speed to the maximum configured value whenever it finds it to be busy more than 95% of the time. That sounds fair enough for most cases, but if you’d like that extra speed bump even when the system is performing somewhat lighter tasks, you’ll have to lower the load threshold. This is also easily done by writing an integer value to a file:

echo 60 > /sys/devices/system/cpu/cpufreq/ondemand/up_threshold

Here we’re saying that we’d like to have the Turbo Mode kick in when the CPU is busy at least 60% of the time. That is enough to make the Pi feel a little snappier during general use.
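To make the effect of the threshold a bit more tangible, here’s a deliberately simplified model of the decision described above. It’s only a sketch: the real governor lives in the kernel and does more than this, the should_boost name is made up, and the strict "greater than" comparison is an assumption of the sketch.

```shell
#!/bin/sh
# Simplified model: boost when the measured load (%) exceeds up_threshold (%).
should_boost() {
    # $1 = load percentage, $2 = up_threshold percentage
    [ "$1" -gt "$2" ]
}

load=70
for threshold in 95 60; do
    if should_boost "$load" "$threshold"; then
        echo "load ${load}%, up_threshold ${threshold}: boost to maximum speed"
    else
        echo "load ${load}%, up_threshold ${threshold}: no boost"
    fi
done
```

A moderately busy system at 70% load never reaches the default threshold of 95, but it does trigger the boost once the threshold is lowered to 60.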

Wrap up

I have to say that I’ve been positively surprised by the capabilities of the Raspberry Pi. Its exceptional form factor and low power consumption make it ideal to work as a NAS in very restricted spaces, like my network closet. Add to that the flexibility that comes from running Linux and the possibilities become truly endless. In fact, the more stuff I add to my Raspberry Pi, the more I’d like it to do. What’s next, a Node.js server?

Grokking Git by seeing it

When I first started getting into Git a couple of years ago, one of the things I found most frustrating about the learning experience was the complete lack of guidance on how to interpret the myriad of commands and switches found in the documentation. On second thought, calling it frustrating is actually an understatement. Utterly painful would be a better way to describe it.

What I was looking for was a way to represent the state of a Git repository in some sort of graphical format. In my mind, if only I could have visualized how the different combinations of commands and switches impacted my repo, I would have had a much better shot at actually understanding their meaning.

After a bit of research on the Git forums, I noticed that many people were using a simple text-based notation to describe the state of their repo. The actual symbols varied a bit, but they all essentially came down to something like this:

               C4--C5 (feature)
              /
    C1--C2--C3--C4'--C5'--C6 (master)
                          ^

where the symbols mean:

- Cn represents a single commit

- Cn’ represents a commit that has been moved from another location in history, i.e. it has been rebased

- (branch) represents a branch name

- ^ indicates the commit referenced by HEAD

This form of graphical DSL proved itself to be extremely useful not only as a learning tool but also as a universal Git language, useful for documentation as well as for communication during problem solving.

Now, keeping this idea in mind, imagine having a tool that is able to draw a similar diagram automatically. Sounds interesting? Well, let me introduce SeeGit.

SeeGit is a Windows application that, given the path to a Git repository on disk, will generate a diagram of its commits and references. Once done, it will keep watching that directory for changes and automatically update the diagram accordingly.

This is where the idea for my Grokking Git by seeing it session came from. The goal is to illustrate the meaning behind different Git operations by going through a series of demos, while having the command line running on one half of the screen and SeeGit on the other. As I type away in the console you can see the Git history unfold in front of you, giving you an insight into how things work under the covers.

In other words, something like this:

So, this is just to give you a little background. Here you’ll find the session’s abstract, slides and demos. There’s also a recording from when I presented this talk at LeetSpeak in Malmö, Sweden back in October 2012. I hope you find it useful.

Abstract

In this session I’ll teach you the Git zen from the inside out. Working out of real world scenarios, I’ll walk you through Git’s fundamental building blocks and common use cases, working our way up to more advanced features. And I’ll do it by showing you graphically what happens under the covers, as we fire different Git commands.

You may already have been using Git for a while to collaborate on some open source project or even at work. You know how to commit files, create branches and merge your work with others. If that’s the case, believe me, you’ve only scratched the surface. I firmly believe that a deep understanding of Git’s inner workings is the key to unlocking its true power, allowing you, as a developer, to take full control of your codebase’s history.