Make your system administrator friendly with PowerShell

I know I’ve said it before, but I love the command line. And being a command line junkie, I’m naturally attracted to all kinds of tools that involve a bright blinking square on a black canvas. Historically, I’ve always been a huge fan of the mighty Bash. PowerShell, however, came along to change that.

When PowerShell first appeared under the codename “Monad” back in 2005, it presented itself as more than just a regular command prompt. In fact, it brought something completely new to the table: it combined the flexibility of a Unix-style shell, such as Bash, with the richness of the .NET Framework and an object-oriented pipeline, which in itself was unheard of. With such a compelling story, it soon became apparent that PowerShell was aiming to become the official command line tool for Windows, replacing both the ancient Command Prompt and the often criticized Windows Script Host. And so it has been.

Seven years have passed since “Monad” was officially released as PowerShell, and its presence is as pervasive as ever. Nowadays you can expect to find PowerShell in just about all of Microsoft’s major server products, from Exchange to SQL Server. It has even become part of Visual Studio through the NuGet Package Manager Console. Not only that, but tools such as posh-git make PowerShell a very nice, and arguably more natural, alternative to Git Bash when using Git on Windows.

Following up on my interest in PowerShell, I’ve found myself talking a fair amount about it at both conferences and user groups. In particular, during the last year or so, I’ve been giving a presentation about how to integrate PowerShell into your own applications.

The idea is to leverage the PowerShell programming model to provide a rich set of administrative tools that will (hopefully) improve the often stormy relationship between devs and admins.

Since I’m often asked about where to get the slides and the code samples from the talk, I thought I would make them all available here in one place for future reference.

So here it is. I hope you find it useful.

Abstract

Have you ever been in a software project where the IT staff who would run the system in production were accounted for right from the start? My guess is not very often. In fact, it’s far too rare to see functionality built into software systems specifically to make the IT administrator’s job easier. It’s a pity, because with tools like PowerShell, pulling that off doesn’t require as much time and effort as you might think.

In this session I’ll show you how to enhance an existing .NET web application with a set of administrative tools built using the PowerShell programming model. Once that is in place, I’ll demonstrate how common maintenance tasks can either be performed manually through a traditional GUI or be fully automated through PowerShell scripts, using the same code base.

Over the last few years, Microsoft itself has committed to making all of its major server products fully administrable through both traditional GUI-based tools and PowerShell. If you’re building a server application on the .NET platform, you will soon be expected to do the same.

Better Diffs with PowerShell

I love working with the command line. In fact, I love it so much that I even use it as my primary way of interacting with the source control repositories of all the projects I’m involved in. It’s a matter of personal taste, admittedly, but there’s also a practical reason for that.

Depending on what I’m working on, I regularly have to switch among several different source control systems. To give you an example, in the last six months alone I’ve been using Mercurial, Git, Subversion and TFS on a weekly basis. Instead of having to learn and get used to different UIs (whether standalone clients or IDE plugins), I find that I can be more productive by sticking to the uniform experience of the command line tools.

To illustrate my point, let me show you how to check in some code with each of the source control systems I mentioned above:

- Mercurial:

hg commit -m "Awesome feature"

- Git:

git commit -m "Awesome feature"

- Subversion:

svn commit -m "Awesome feature"

- TFS:

tf checkin /comment:"Awesome feature"

As you can see, it looks pretty much the same across the board.

Of course, you need to be aware of the fundamental differences in how Distributed Version Control Systems (DVCS) such as Mercurial and Git behave compared to traditional centralized Version Control Systems (VCS) like Subversion and TFS. For example, hg commit and git commit record the changes in your local repository, whereas svn commit and tf checkin send them straight to the server. In addition to that, each system tries to distinguish itself with its own set of features or by solving a common problem (like branching) in its own way. However, these aspects must be taken into consideration regardless of your client of choice. What I’m saying is that the command line interface at least offers a single point of entry into all of those systems, which in the end makes me more productive.

Unified DIFFs

One of the most basic features of any source control system is the ability to compare two versions of the same file to see what’s changed. The output of such a comparison, or DIFF, is commonly represented in text using the Unified DIFF format, which looks something like this:

@@ -6,12 +6,10 @@
-#import <SenTestingKit/SenTestingKit.h>
-#import <UIKit/UIKit.h>
-
 @interface QuoteTest : SenTestCase {
 }
 - (void)testQuoteForInsert_ReturnsNotNull;
+- (void)testQuoteForInsert_ReturnsPersistedQuote;
 @end

In the Unified DIFF format, changes are displayed at the line level through a set of well-known prefixes. The rule is simple:

A line can either be added, in which case it is preceded by a + sign, or removed, in which case it is preceded by a - sign. Unchanged lines, included for context, are preceded by a single space.
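The rule translates almost directly into code. Below is a minimal PowerShell sketch, not taken from the original post, of a hypothetical Get-DiffLineKind function that classifies a single line by its prefix with a switch -Regex statement. It also recognizes hunk headers and the ---/+++ file headers that tools like Git print before each file; those have to be tested first, since they also start with - and +.

```powershell
# Sketch: classifies one line of Unified DIFF text by its prefix.
# Get-DiffLineKind is a hypothetical name used only for illustration.
function Get-DiffLineKind {
    param([string]$Line)

    # switch -Regex evaluates the clauses top to bottom; 'break' stops at the
    # first match. The '---'/'+++' file headers must be tested before the
    # single '-'/'+' prefixes, or they would be mistaken for removed/added lines.
    # Like any prefix heuristic, a removed line whose content starts with '-- '
    # would be misread as a file header.
    switch -Regex ($Line) {
        '^(---|\+\+\+) ' { 'FileHeader'; break }
        '^@@'            { 'HunkHeader'; break }
        '^\+'            { 'Added';      break }
        '^-'             { 'Removed';    break }
        default          { 'Context' }
    }
}
```

Piping real output through it, for example git diff | ForEach-Object { Get-DiffLineKind $_ } | Group-Object, gives a quick count of each kind of line.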

In addition to that, each modified section, referred to as a hunk, is preceded by a header that indicates the position and size of the section in the original and modified file, respectively. For example, this hunk header:

@@ -6,12 +6,10 @@

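means that the hunk covers 12 lines of the original file, starting at line 6. In the new file, that same section also starts at line 6 but spans a total of 10 lines. Both counts include the unchanged context lines around the change, not just the lines that were removed or added, so the difference between them tells you that the change removed two more lines than it added.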
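To make the arithmetic concrete, here is a sketch of a hypothetical ConvertFrom-HunkHeader function that pulls the four numbers out of a header with a regular expression. It assumes the header follows the -start,count +start,count shape shown above where, as in GNU diff's output, a count of 1 may be omitted.

```powershell
# Sketch: ConvertFrom-HunkHeader is a hypothetical helper that extracts the
# four numbers from a Unified DIFF hunk header such as '@@ -6,12 +6,10 @@'.
function ConvertFrom-HunkHeader {
    param([Parameter(Mandatory = $true)][string]$Header)

    # -start[,count] +start[,count]; anything after the closing '@@'
    # (Git appends the enclosing function or section name) is ignored.
    if (-not ($Header -match '^@@ -(\d+)(?:,(\d+))? \+(\d+)(?:,(\d+))? @@')) {
        throw "Not a hunk header: $Header"
    }

    # When a count is omitted (e.g. '@@ -3 +3 @@') it defaults to 1.
    $oldCount = 1
    if ($Matches[2]) { $oldCount = [int]$Matches[2] }
    $newCount = 1
    if ($Matches[4]) { $newCount = [int]$Matches[4] }

    [pscustomobject]@{
        OldStart = [int]$Matches[1]
        OldCount = $oldCount
        NewStart = [int]$Matches[3]
        NewCount = $newCount
    }
}
```

For the header above it returns OldStart 6, OldCount 12, NewStart 6 and NewCount 10; in other words, the hunk spans lines 6 through 17 (6 + 12 - 1) of the original file and lines 6 through 15 (6 + 10 - 1) of the new one.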

True Colors

At this point, you may wonder what all of this has to do with PowerShell, and rightly so. Remember when I said that I prefer to work with source control from the command line? Well, it turns out that scrolling through gobs of text in a console window isn’t always the best way to figure out what has changed between two change sets.

Fortunately, since PowerShell can print text in the console window using different colors, it only took a chain of if/elseif statements and a few regular expressions to turn that wall of text into something more readable. That’s how the Out-Diff cmdlet was born:

function Out-Diff {
<#
.Synopsis
    Redirects Unified DIFF text from the pipeline to the host, using colors to highlight the differences.

.Description
    Helper function to highlight the differences in Unified DIFF text using color coding.

.Parameter InputObject
    The text to display as Unified DIFF.
#>
    [CmdletBinding()]
    param(
        [Parameter(Mandatory=$true, ValueFromPipeline=$true)]
        [PSObject]$InputObject
    )

    Process {
        # Out-String converts the pipeline object to text and appends a newline,
        # which is why every Write-Host call below uses -NoNewline.
        $contentLine = $InputObject | Out-String

        if ($contentLine -match "^Index:") {
            # File separator printed by Subversion
            Write-Host $contentLine -ForegroundColor Cyan -NoNewline
        } elseif ($contentLine -match "^(\+|\-|\=){3}") {
            # ---/+++ file headers and ==== separators (tested before single +/-)
            Write-Host $contentLine -ForegroundColor Gray -NoNewline
        } elseif ($contentLine -match "^\@{2}") {
            # Hunk headers
            Write-Host $contentLine -ForegroundColor Gray -NoNewline
        } elseif ($contentLine -match "^\+") {
            # Added lines
            Write-Host $contentLine -ForegroundColor Green -NoNewline
        } elseif ($contentLine -match "^\-") {
            # Removed lines
            Write-Host $contentLine -ForegroundColor Red -NoNewline
        } else {
            # Unchanged context lines
            Write-Host $contentLine -NoNewline
        }
    }
}

Let’s break this function down into logical steps:

- Take whatever input comes from the PowerShell pipeline and convert it to a string.

- Match that string against a set of regular expressions to determine which part of the Unified DIFF format it belongs to.

- Print the string to the console with the appropriate color: green for added lines, red for removed lines, gray for the headers and cyan for the Index: lines that Subversion prints before each file.

Pretty simple. And using it is even simpler: just load the script into your PowerShell session, either by dot sourcing it or by adding it to your profile, then pipe the output of a diff command to Out-Diff to start enjoying colorized DIFFs. For example, the following commands:

. .\Out-Diff.ps1
git diff | Out-Diff

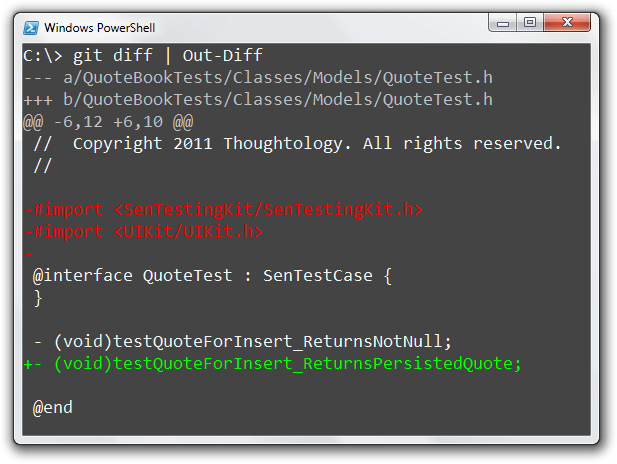

will generate this output in PowerShell:

The Out-Diff PowerShell cmdlet in action

One thing I’d like to point out is that, even though the output of git diff consists of many lines of text, PowerShell passes them to the Out-Diff function one line at a time, running its Process block once for each line. This is called a streaming pipeline, and it allows PowerShell to stay responsive and consume less memory even when processing large amounts of data. Neat.
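To see the streaming model at work, here is a sketch of a hypothetical Measure-DiffLine function, not part of Out-Diff, that tallies added and removed lines. Its begin and end blocks run once, while its process block runs once for every line as it arrives, so the function itself only keeps two counters in memory however long the diff is.

```powershell
# Sketch: Measure-DiffLine is a hypothetical function used only to illustrate
# how an advanced function consumes a streaming pipeline.
function Measure-DiffLine {
    [CmdletBinding()]
    param(
        [Parameter(ValueFromPipeline = $true)]
        [string]$Line
    )

    begin {
        # Runs once, before the first line arrives.
        $added = 0
        $removed = 0
    }

    process {
        # Runs once for every line, as soon as it is written to the pipeline.
        # 'return' here only ends the processing of the current line.
        if ($Line -match '^(---|\+\+\+) ') { return }   # skip file headers
        if ($Line -match '^\+') { $added++ }
        elseif ($Line -match '^-') { $removed++ }
    }

    end {
        # Runs once, after the last line has been processed.
        [pscustomobject]@{ Added = $added; Removed = $removed }
    }
}
```

Running git diff | Measure-DiffLine would emit a small summary object once Git finishes writing its output.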

Wrapping up

PowerShell is an extremely versatile console. In this case, it allowed me to enhance a traditional command line tool (diff) through a simple script. Other projects, like Posh-Git and Posh-Hg, take it even further and leverage PowerShell’s rich programming model to provide a better experience on top of existing console-based source control tools. If you enjoy working with the command line, I strongly encourage you to check them out.

Keep your unit tests DRY with AutoFixture Customizations

When I first incorporated AutoFixture into my daily unit testing workflow, I noticed a consistent usage pattern starting to emerge. This pattern can be roughly summarized in three steps:

- Initialize an instance of the Fixture class.

- Configure the way different types of objects involved in the test should be created by using the Build method.

- Create the actual objects with the CreateAnonymous or CreateMany methods.

As a result, my unit tests had started to look a lot like this:

[Test]
public void WhenGettingAListOfPublishedPostsThenItShouldOnlyIncludeThose()
{
    // Step 1: Initialize the Fixture
    var fixture = new Fixture();

    // Step 2: Configure the object creation
    var draft = fixture.Build<Post>()
        .With(a => a.IsDraft, true)
        .CreateAnonymous();
    var publishedPost = fixture.Build<Post>()
        .With(a => a.IsDraft, false)
        .CreateAnonymous();
    fixture.Register<IEnumerable<Post>>(() => new[] { draft, publishedPost });

    // Step 3: Create the anonymous objects
    var posts = fixture.CreateMany<Post>();

    // Act and Assert...
}

In this particular configuration, AutoFixture will satisfy all requests for IEnumerable<Post> by returning the same array with exactly two Post objects: one with the IsDraft property set to true and one with the same property set to false.

At that point I felt pretty satisfied with the way things were shaping up: I had managed to replace entire blocks of boring object initialization code with a couple of calls to the AutoFixture API, my unit tests were getting smaller and all was good.

Duplication creeps in

After a while, though, the configuration code written in Step 2 started to repeat itself across multiple unit tests. This was only natural, since different unit tests sometimes shared a common set of object states in their test scenarios. Things weren’t so DRY anymore, and suddenly it wasn’t uncommon to find code like this in the test suite:

[Test]
public void WhenGettingAListOfPublishedPostsThenItShouldOnlyIncludeThose()
{
    var fixture = new Fixture();
    var draft = fixture.Build<Post>()
        .With(a => a.IsDraft, true)
        .CreateAnonymous();
    var publishedPost = fixture.Build<Post>()
        .With(a => a.IsDraft, false)
        .CreateAnonymous();
    fixture.Register<IEnumerable<Post>>(() => new[] { draft, publishedPost });
    var posts = fixture.CreateMany<Post>();

    // Act and Assert...
}

[Test]
public void WhenGettingAListOfDraftsThenItShouldOnlyIncludeThose()
{
    var fixture = new Fixture();
    var draft = fixture.Build<Post>()
        .With(a => a.IsDraft, true)
        .CreateAnonymous();
    var publishedPost = fixture.Build<Post>()
        .With(a => a.IsDraft, false)
        .CreateAnonymous();
    fixture.Register<IEnumerable<Post>>(() => new[] { draft, publishedPost });
    var posts = fixture.CreateMany<Post>();

    // Different Act and Assert...
}

See how these two tests share the same initial state even though they verify completely different behaviors? Such blatant duplication in the test code is a problem, since it makes the tests harder to change: any change to the shared scenario has to be repeated in every test that uses it. Luckily, a solution was just around the corner: customizations.

Customizing your way out

Customization is a pretty general term. In the context of AutoFixture, however, it takes on a specific definition:

A customization is a group of settings that, when applied to a given Fixture, control the way AutoFixture will create anonymous instances of the types requested through that Fixture.

What that means is that I could take all the boilerplate configuration code written in Step 2 and move it out of my unit tests into a single place: a customization. That allowed me to specify only once how different objects needed to be created for a given scenario, and to reuse that across multiple tests.

public class MixedDraftsAndPublishedPostsCustomization : ICustomization
{
    public void Customize(IFixture fixture)
    {
        var draft = fixture.Build<Post>()
            .With(a => a.IsDraft, true)
            .CreateAnonymous();
        var publishedPost = fixture.Build<Post>()
            .With(a => a.IsDraft, false)
            .CreateAnonymous();

        // Every request for IEnumerable<Post> now returns these two posts
        fixture.Register<IEnumerable<Post>>(() => new[] { draft, publishedPost });
    }
}

As you can see, ICustomization is nothing more than a role interface that describes how a Fixture should be set up. To apply a customization to a specific Fixture instance, you simply call the Fixture.Customize(ICustomization) method, as shown in the example below.

This newfound encapsulation allowed me to rewrite my unit tests in a much terser way:

[Test]
public void WhenGettingAListOfDraftsThenItShouldOnlyIncludeThose()
{
    // Step 1: Initialize the Fixture
    var fixture = new Fixture();

    // Step 2: Apply the customization for the test scenario
    fixture.Customize(new MixedDraftsAndPublishedPostsCustomization());

    // Step 3: Create the anonymous objects
    var posts = fixture.CreateMany<Post>();

    // Act and Assert...
}

The configuration logic now exists only in one place, namely a class whose name clearly describes the kind of test data it will produce. If applied consistently, this approach will in time build up a library of customizations, each representative of a given situation or scenario. Assuming that they are created at the proper level of granularity, these customizations could even be composed to form more complex scenarios.

Conclusion

Customizations in AutoFixture are a pretty powerful concept in and of themselves, but they become even more effective when mapped directly to test scenarios. In fact, they represent a natural place to specify which objects are involved in a given scenario and the state they are supposed to be in. You can use them to remove duplication in your test code and, in time, build up a library of self-documenting modules that describe the different contexts in which the system’s behavior is being verified.